Gdax You Can Try Again in 1 Day.


Incremental refresh and real-time data for datasets

Incremental refresh extends scheduled refresh operations by providing automated partition creation and management for dataset tables that frequently load new and updated data. For most datasets, this is one or more tables that contain transaction data that changes often and can grow exponentially, like a fact table in a relational or star database schema. An incremental refresh policy to partition the table, refreshing only the most recent import partition(s), and optionally leveraging an additional DirectQuery partition for real-time data can significantly reduce the amount of data that has to be refreshed while at the same time ensuring that even the latest changes at the data source are included in the query results.

With incremental refresh and real-time data:

- Fewer refresh cycles for fast-changing data - DirectQuery mode gets the latest data updates as queries are processed without requiring a high refresh cadence.

- Refreshes are faster - Only the most recent data that has changed needs to be refreshed.

- Refreshes are more reliable - Long-running connections to volatile data sources aren't necessary. Queries to source data run faster, reducing the potential for network issues to interfere.

- Resource consumption is reduced - Less data to refresh reduces overall consumption of memory and other resources in both Power BI and data source systems.

- Enables large datasets - Datasets with potentially billions of rows can grow without the need to fully refresh the entire dataset with each refresh operation.

- Easy setup - Incremental refresh policies are defined in Power BI Desktop with just a few tasks. When published, the service automatically applies those policies with each refresh.

When you publish a Power BI Desktop model to the service, each table in the new dataset has a single partition. That single partition contains all rows for that table. If the table is large, say with tens of millions of rows or even more, a refresh for that table can take a long time and consume an excessive amount of resources.

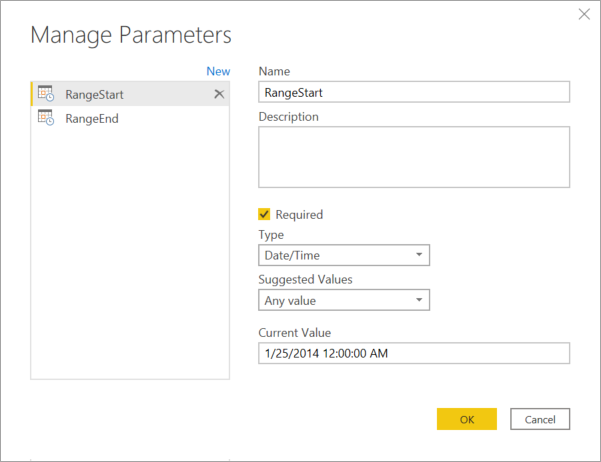

With incremental refresh, the service dynamically partitions and separates data that needs to be refreshed frequently from data that can be refreshed less frequently. Table data is filtered by using Power Query date/time parameters with the reserved, case-sensitive names RangeStart and RangeEnd. When initially configuring incremental refresh in Power BI Desktop, the parameters are used to filter only a small period of data to be loaded into the model. When published to the service, with the first refresh operation, the service creates incremental refresh and historical partitions and optionally a real-time DirectQuery partition based on incremental refresh policy settings, then overrides the parameter values to filter and query data for each partition based on date/time values for each row.

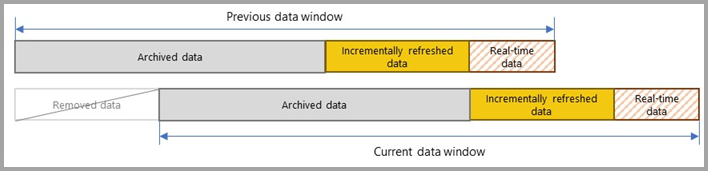

With each subsequent refresh, the query filters return only those rows within the refresh period dynamically defined by the parameters. Those rows with a date/time within the refresh period are refreshed. Rows with a date/time no longer within the refresh period then become part of the historical period, which is not refreshed. If a real-time DirectQuery partition is included in the incremental refresh policy, its filter is also updated so that it picks up any changes that occurred after the refresh period. Both the refresh and historical periods are rolled forward. As new incremental refresh partitions are created, refresh partitions no longer in the refresh period become historical partitions. Over time, historical partitions become less granular as they are merged together. When a historical partition is no longer in the historical period defined by the policy, it is removed from the dataset entirely. This is known as a rolling window pattern.

The beauty of incremental refresh is that the service handles all of this for you based on the incremental refresh policies you define. In fact, the process and the partitions created from it are not even visible in the service. In most cases, a well-defined incremental refresh policy is all that is necessary to significantly improve dataset refresh performance. However, the real-time DirectQuery partition is only supported for datasets in Premium capacities. Power BI Premium also enables more advanced partition and refresh scenarios through the XMLA endpoint.

Requirements

Supported plans

Incremental refresh is supported for Power BI Premium, Premium per user, Power BI Pro, and Power BI Embedded datasets.

Getting the latest data in real time with DirectQuery is only supported for Power BI Premium, Premium per user, and Power BI Embedded datasets.

Supported data sources

Incremental refresh and real-time data works best for structured, relational data sources, like SQL Database and Azure Synapse, but can also work for other data sources. In any case, your data source must support the following:

Date column - The table must contain a date column of date/time or integer data type. The RangeStart and RangeEnd parameters (which must be date/time data type) filter table data based on the date column. For date columns of integer surrogate keys in the form of yyyymmdd, you can create a function that converts the date/time value in the RangeStart and RangeEnd parameters to match the integer surrogate keys of the date column. To learn more, see Configure incremental refresh - Convert DateTime to integer.
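As a minimal sketch of the integer surrogate key case, the query below converts the parameters to yyyymmdd integers before filtering. The helper name DateKey and the column name OrderDateKey are assumptions made for illustration; the server, database, and table are the same placeholders used in the timeout example in this article, and RangeStart and RangeEnd are the date/time parameters defined in the model.

```powerquery
let
    // Hypothetical helper: converts a date/time value to a yyyymmdd integer
    DateKey = (x as datetime) as number =>
        Date.Year(x) * 10000 + Date.Month(x) * 100 + Date.Day(x),
    // Placeholder server and database names
    Source = Sql.Database("myserver.database.windows.net", "AdventureWorks"),
    dbo_Fact = Source{[Schema="dbo",Item="FactInternetSales"]}[Data],
    // OrderDateKey is an assumed integer date column in yyyymmdd form
    #"Filtered Rows" = Table.SelectRows(
        dbo_Fact,
        each [OrderDateKey] >= DateKey(RangeStart) and [OrderDateKey] < DateKey(RangeEnd)
    )
in
    #"Filtered Rows"
```

The comparison keeps the same inclusive start and exclusive end as a date/time filter, so a row belongs to exactly one period. Whether this filter folds to the source should still be verified as described under Query folding.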

Query folding - Incremental refresh is designed for data sources that support query folding, which is Power Query's ability to generate a single query expression to retrieve and transform source data, especially if getting the latest data in real time with DirectQuery. Most data sources that support SQL queries support query folding. Data sources like flat files, blobs, and some web feeds often do not.

When incremental refresh is configured, a Power Query expression that includes a date/time filter based on the RangeStart and RangeEnd parameters is executed against the data source. The filter is in effect a transformation included in the query that defines a WHERE clause based on the parameters. In cases where the filter is not supported by the data source, it cannot be included in the query expression. If the incremental refresh policy includes getting real-time data with DirectQuery, non-folding transformations can't be used. If it's a pure import mode policy without real-time data, the query mashup engine might compensate and apply the filter locally, which requires retrieving all rows for the table from the data source. This can cause incremental refresh to be slow, and the process can run out of resources either in the Power BI service or in an On-premises Data Gateway - effectively defeating the purpose of incremental refresh.

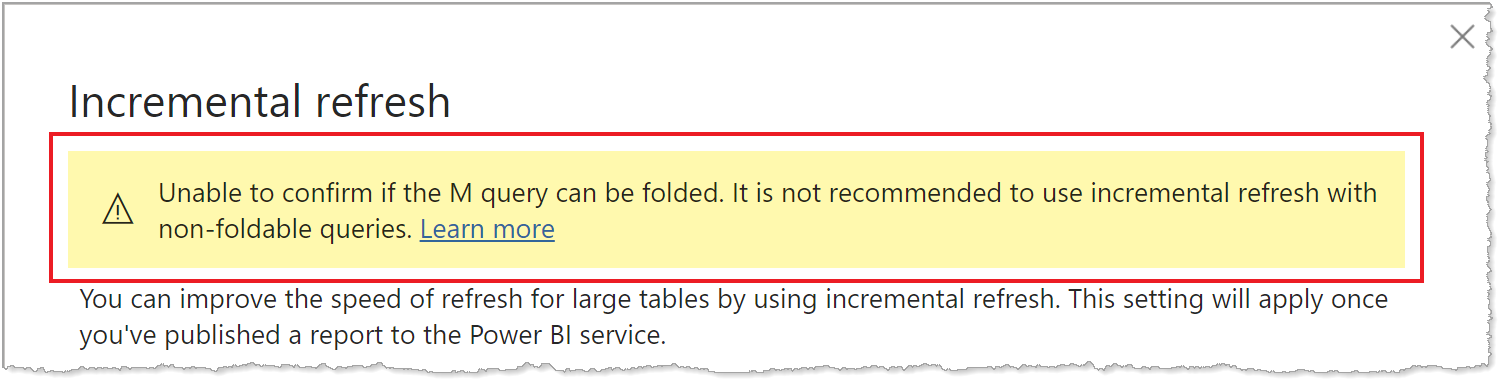

Because support for query folding differs between types of data sources, verification should be performed to ensure the filter logic is included in the queries being executed against the data source. In most cases, Power BI Desktop attempts to perform this verification for you when defining the incremental refresh policy. For SQL-based data sources such as SQL Database, Azure Synapse, Oracle, and Teradata, this verification is reliable. However, other data sources may be unable to verify without tracing the queries. If Power BI Desktop is unable to confirm, a warning is shown in the Incremental refresh policy configuration dialog.

If you see this warning and want to verify the necessary query folding is occurring, use the Power Query Diagnostics feature or trace queries by using a tool supported by the data source, like SQL Profiler. If query folding is not occurring, verify the filter logic is included in the query being passed to the data source. If not, it's likely the query includes a transformation that prevents folding.

Before configuring your incremental refresh solution, be sure to thoroughly read and understand Query folding guidance in Power BI Desktop and Power Query query folding. These articles can help you determine if your data source and queries support query folding.

Single data source

When configuring incremental refresh and real-time data by using Power BI Desktop, or configuring an advanced solution by using Tabular Model Scripting Language (TMSL) or Tabular Object Model (TOM) through the XMLA endpoint, all partitions, whether import or DirectQuery, must query data from a single source.

Other data source types

By using additional custom query functions and query logic, incremental refresh can be used with other types of data sources, provided filters based on RangeStart and RangeEnd can be passed in a single query. Examples include Excel workbook files stored in a folder, files in SharePoint, or RSS feeds. Keep in mind these are advanced scenarios that require additional customization and testing beyond what is described here. Be sure to check the Community section later in this article for suggestions on how you can find more info about using incremental refresh for unique scenarios like these.

Time limits

Regardless of incremental refresh, Power BI Pro datasets have a refresh time limit of two hours and do not support getting real-time data with DirectQuery. For datasets in a Premium capacity, the time limit is five hours. Refresh operations are process and memory intensive. A full refresh operation can use as much as double the amount of memory required by the dataset alone because the service maintains a snapshot of the dataset in memory until the refresh operation is complete. Refresh operations can also be process intensive, consuming a significant amount of available CPU resources. Refresh operations must also rely on volatile connections to data sources, and the ability of those data source systems to quickly return query output. The time limit is a safeguard to limit over-consumption of your available resources.

Because incremental refresh optimizes refresh operations at the partition level in the dataset, resource consumption can be significantly reduced. At the same time, even with incremental refresh, unless through the XMLA endpoint, refresh operations are bound by those same two and five-hour limits. An effective incremental refresh policy not only reduces the amount of data processed with a refresh operation, but also reduces the amount of unnecessary historical data stored in your dataset.

Queries can also be limited by a default time limit for the data source. Most relational data sources allow overriding time limits in the Power Query M expression. For example, the expression below uses the SQL Server data-access function to set CommandTimeout to 2 hours. Each period defined by the policy ranges submits a query observing the command timeout setting.

    let
        Source = Sql.Database("myserver.database.windows.net", "AdventureWorks", [CommandTimeout=#duration(0, 2, 0, 0)]),
        dbo_Fact = Source{[Schema="dbo",Item="FactInternetSales"]}[Data],
        #"Filtered Rows" = Table.SelectRows(dbo_Fact, each [OrderDate] >= RangeStart and [OrderDate] < RangeEnd)
    in
        #"Filtered Rows"

For very large datasets in Premium capacities that will likely contain billions of rows, the initial refresh operation can be bootstrapped. Bootstrapping allows the service to create table and partition objects for the dataset, but not load and process data into any of the partitions. By using SQL Server Management Studio, partitions can then be processed individually, sequentially, or in parallel, which can both reduce the amount of data returned in a single query and bypass the five-hour time limit. To learn more, see Advanced incremental refresh - Prevent timeouts on initial full refresh.

Current date and time

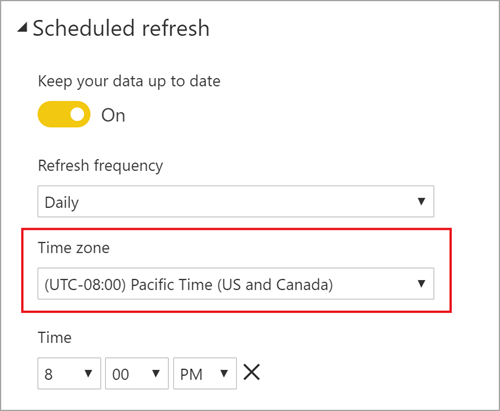

The current date and time is based on the system date at the time of refresh. If scheduled refresh is enabled for the dataset in the service, the specified time zone will be taken into account when determining the current date and time. Both individual and scheduled refreshes through the service observe the time zone if available. For example, a refresh that occurs at 8 PM Pacific Time (US and Canada) with a time zone specified will determine the current date and time based on Pacific Time, not GMT (which would otherwise be the next day). Refresh operations not invoked through the service, such as the TMSL refresh command, will not consider the scheduled refresh time zone.
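The date shift in the 8 PM example can be checked with a small Power Query M expression. This is only an illustration of the arithmetic, not how the service itself computes the current date; the specific date and the fixed UTC-8 offset (Pacific Standard Time) are assumed values.

```powerquery
let
    // Assumed example instant: 8:00 PM on March 1, 2022 at UTC-8
    RefreshLocal = #datetimezone(2022, 3, 1, 20, 0, 0, -8, 0),
    // The same instant expressed in UTC
    RefreshUtc = DateTimeZone.ToUtc(RefreshLocal),
    // Calendar date as seen in each zone
    LocalDate = DateTime.Date(DateTimeZone.RemoveZone(RefreshLocal)),
    UtcDate = DateTime.Date(DateTimeZone.RemoveZone(RefreshUtc))
in
    [Local = LocalDate, Utc = UtcDate]
```

With these values the local date is March 1 while the UTC date is March 2, which is why a refresh that ignores the scheduled time zone can place the period boundaries a day later than expected.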

Configuring incremental refresh and real-time data

We'll go over important concepts of configuring incremental refresh and real-time data here. When you're ready for more detailed step-by-step instructions, be sure to check out Configure incremental refresh and real-time data for datasets.

Configuring incremental refresh is done in Power BI Desktop. For most models, only a few tasks are required. However, keep the following in mind:

- When published to the service, you can't publish the same model again from Power BI Desktop. Republishing would remove any existing partitions and data already in the dataset. If you're publishing to a Premium capacity, subsequent metadata schema changes can be made with tools such as the open-source ALM Toolkit or by using Tabular Model Scripting Language (TMSL). To learn more, see Advanced incremental refresh - Metadata-only deployment.

- When published to the service, you can't download the dataset back as a PBIX to Power BI Desktop. Because datasets in the service can grow so large, it's impractical to download them and open them on a typical desktop computer.

- When getting real-time data with DirectQuery, you can't publish the dataset to a non-Premium workspace. Incremental refresh with real-time data is only supported with Power BI Premium.

Create parameters

When configuring incremental refresh in Power BI Desktop, you first create two Power Query date/time parameters with the reserved, case-sensitive names RangeStart and RangeEnd. These parameters, defined in the Manage Parameters dialog in Power Query Editor, are initially used to filter the data loaded into the Power BI Desktop model table to include only those rows with a date/time within that period. After the model is published to the service, RangeStart and RangeEnd are overridden automatically by the service to query data defined by the refresh period specified in the incremental refresh policy settings.

For example, our FactInternetSales data source table averages 10k new rows per day. To limit the number of rows initially loaded into the model in Power BI Desktop, we specify a two-day period between RangeStart and RangeEnd.
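For reference, the sketch below shows roughly how the two parameters look when written as M rather than created through the Manage Parameters dialog. The dates are example values for a two-day period, and the metadata record reflects what the dialog is understood to generate, so treat the exact fields as an assumption and prefer the dialog when in doubt.

```powerquery
section Section1;

// Example values only: a two-day period for the initial load in Power BI Desktop
shared RangeStart = #datetime(2022, 1, 1, 0, 0, 0)
    meta [IsParameterQuery = true, Type = "DateTime", IsParameterQueryRequired = true];

shared RangeEnd = #datetime(2022, 1, 3, 0, 0, 0)
    meta [IsParameterQuery = true, Type = "DateTime", IsParameterQueryRequired = true];
```

Both values must be of date/time type, and the names must match RangeStart and RangeEnd exactly because they are case-sensitive.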

Filter data

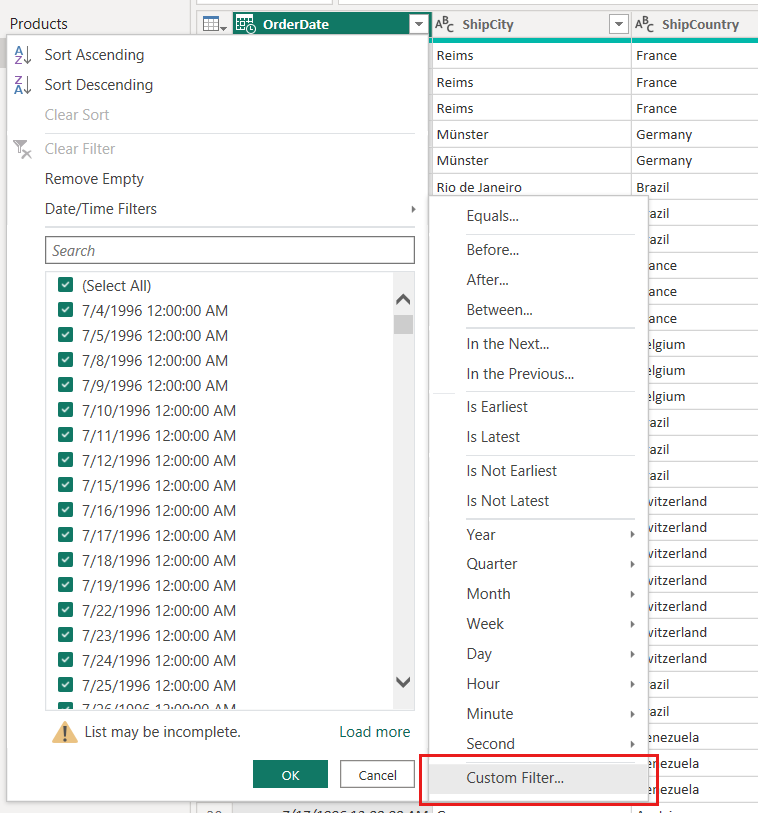

With RangeStart and RangeEnd parameters defined, you then apply custom Date filters on your table's date column. The filters you apply select a subset of data that will be loaded into the model when you click Apply.

Using our FactInternetSales example, after creating filters based on the parameters and applying steps, two days of data, roughly 20k rows, is loaded into our model.

Define policy

After filters have been applied and a subset of data has been loaded into the model, you then define an incremental refresh policy for the table. After the model is published to the service, the policy is used by the service to create and manage table partitions and perform refresh operations. To define the policy, you will use the Incremental refresh and real-time data dialog box to specify both required settings and optional settings.

Table

The Select table listbox defaults to the table you select in Data view. Enable incremental refresh for the table with the slider. If the Power Query expression for the table doesn't include a filter based on the RangeStart and RangeEnd parameters, the toggle is disabled.

Required settings

The Archive data starting before refresh date setting determines the historical period in which rows with a date/time in that period are included in the dataset, plus rows for the current incomplete historical period, plus rows in the refresh period up to the current date and time.

For example, if we specify 5 years, our table will store the last five whole years of historical data in year partitions, plus rows for the current year in quarter, month, or day partitions, up to and including the refresh period.

For datasets in Premium capacities, backdated historical partitions can be selectively refreshed at a granularity determined by this setting. To learn more, see Advanced incremental refresh - Partitions.

The Incrementally refresh data starting before refresh date setting determines the incremental refresh period in which all rows with a date/time in that period are included in the refresh partition(s) and refreshed with each refresh operation.

For example, if we specify a refresh period of 3 days, with each refresh operation the service overrides the RangeStart and RangeEnd parameters to create a query for rows with a date/time within a three-day period, beginning and ending dependent on the current date and time. Rows with a date/time in the last 3 days up to the current refresh operation time are refreshed. With this type of policy, we can expect our FactInternetSales dataset table in the service, which averages 10k new rows per day, to refresh roughly 30k rows with each refresh operation.
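To make the three-day example concrete, the following sketch lists one RangeStart and RangeEnd pair per day of the refresh period. It is an illustration only: the current date is an assumed value, and the sketch assumes day-level boundaries with the window ending on the current day, which may differ from what the service does under a given policy.

```powerquery
let
    // Assumed values for illustration only
    CurrentDate = #date(2022, 3, 10),
    RefreshDays = 3,
    // First day of the window, assuming the window ends with the current day
    FirstDay = Date.AddDays(CurrentDate, -(RefreshDays - 1)),
    Days = List.Dates(FirstDay, RefreshDays, #duration(1, 0, 0, 0)),
    // One pair per day: inclusive RangeStart, exclusive RangeEnd
    Windows = List.Transform(
        Days,
        (d) => [
            RangeStart = DateTime.From(d),
            RangeEnd = DateTime.From(Date.AddDays(d, 1))
        ]
    )
in
    Table.FromRecords(Windows)
```

With the assumed values, the result has three rows covering March 8, 9, and 10. At roughly 10k new rows per day, that corresponds to the 30k rows mentioned above.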

Be sure to specify a period that includes only the minimum number of rows required to ensure accurate reporting. If defining policies for more than one table, the same RangeStart and RangeEnd parameters must be used even if different store and refresh periods are defined for each table.

Optional settings

The Get the latest data in real time with DirectQuery (Premium only) setting enables fetching the latest changes from the selected table at the data source beyond the incremental refresh period by using DirectQuery. All rows with a date/time later than the incremental refresh period are included in a DirectQuery partition and fetched from the data source with every dataset query.

For example, if enabled, with each refresh operation the service still overrides the RangeStart and RangeEnd parameters to create a query for rows with a date/time after the refresh period, beginning dependent on the current date and time. Rows with a date/time after the current refresh operation time are included. With this type of policy, we can expect our FactInternetSales dataset table in the service to include even the latest data updates.

The Only refresh complete days setting ensures all rows for the entire day are included in the refresh operation. This setting is optional unless you enable the Get the latest data in real time with DirectQuery (Premium only) setting. Let's say your refresh is scheduled to run at 4:00 AM every morning. If new rows of data appear in the data source table during those four hours between midnight and 4:00 AM, you do not want to account for them. Some business metrics, like barrels per day in the oil and gas industry, make no sense with partial days. Another example is refreshing data from a financial system where data for the previous month is approved on the 12th calendar day of the month. You could set the refresh period to 1 month and schedule the refresh to run on the 12th day of the month. With this option selected, it would, for example, refresh January data on February 12.

Keep in mind, unless scheduled refresh is configured for a non-UTC time zone, refresh operations in the service run under UTC time, which can determine the effective date and affect complete periods.

The Detect data changes setting enables even more selective refresh. You can select a date/time column used to identify and refresh only those days where the data has changed. This assumes such a column exists in the data source, which is typically for auditing purposes. This should not be the same column used to partition the data with the RangeStart and RangeEnd parameters. The maximum value of this column is evaluated for each of the periods in the incremental range. If it hasn't changed since the last refresh, there's no need to refresh the period. In this example, this could potentially further reduce the days incrementally refreshed from 3 to 1.
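The per-period check described above amounts to taking the maximum of the audit column over the rows in one period. The sketch below shows that calculation on stand-in rows; the column name LastUpdateDate and the sample values are assumptions, and RangeStart and RangeEnd are the model parameters rather than values defined here.

```powerquery
let
    // Stand-in rows; LastUpdateDate is an assumed name for the audit column
    Source = #table(
        type table [OrderDate = datetime, LastUpdateDate = datetime],
        {
            {#datetime(2022, 3, 8, 9, 0, 0), #datetime(2022, 3, 8, 9, 5, 0)},
            {#datetime(2022, 3, 8, 14, 0, 0), #datetime(2022, 3, 9, 11, 30, 0)}
        }
    ),
    // Restrict to one period, using the same parameter filter as the table query
    InPeriod = Table.SelectRows(Source, each [OrderDate] >= RangeStart and [OrderDate] < RangeEnd),
    // Value to compare with the one recorded at the previous refresh
    MaxLastUpdate = List.Max(InPeriod[LastUpdateDate])
in
    MaxLastUpdate
```

If the value returned for a period matches the one recorded at the previous refresh, that period can be skipped. Note that the filter uses the partitioning column (OrderDate) while the maximum is taken over a different column, consistent with the guidance that the two should not be the same.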

The current design requires that the column to detect data changes is persisted and cached into memory. The following techniques can be used to reduce cardinality and memory consumption:

- Persist only the maximum value of the column at time of refresh, perhaps by using a Power Query function.

- Reduce the precision to an acceptable level, given your refresh-frequency requirements.

- Define a custom query for detecting data changes by using the XMLA endpoint and avoid persisting the column value altogether.

In some cases, enabling the Detect data changes option can be further enhanced. For example, you may want to avoid persisting a last-update column in the in-memory cache, or enable scenarios where a configuration/instruction table is prepared by ETL processes for flagging only those partitions that need to be refreshed. In cases like these, for Premium capacities, use Tabular Model Scripting Language (TMSL) and/or the Tabular Object Model (TOM) to override the detect data changes behavior. To learn more, see Advanced incremental refresh - Custom queries for detect data changes.

Publish

After configuring the incremental refresh policy, you publish the model to the service. When publishing is complete, you can perform the initial refresh operation on the dataset.

Note

Datasets with an incremental refresh policy to get the latest data in real time with DirectQuery can only be published to a Premium workspace.

For datasets published to workspaces assigned to Premium capacities, if you think the dataset will grow beyond 1 GB, you can improve refresh operation performance and ensure the dataset doesn't max out size limits by enabling Large dataset storage format before performing the first refresh operation in the service. To learn more, see Large datasets in Power BI Premium.

Important

After being published to the service, you cannot download the PBIX back.

Refresh

After publishing to the service, you perform an initial refresh operation on the dataset. This should be an individual (manual) refresh so you can monitor progress. The initial refresh operation can take quite a while to complete. Partitions must be created, historical data loaded, objects such as relationships and hierarchies built or rebuilt, and calculated objects recalculated.

Subsequent refresh operations, either individual or scheduled, are much faster because only the incremental refresh partition(s) is refreshed. Other processing operations must still occur, like merging partitions and recalculation, but they usually take only a small fraction of the time compared to the initial refresh.

Automatic report refresh

For reports using a dataset with an incremental refresh policy to get the latest data in real time with DirectQuery, it's a good idea to enable automatic page refresh at a fixed interval or based on change detection so that the reports include the latest data without delay. To learn more, see Automatic page refresh in Power BI.

Advanced incremental refresh

If your dataset is on a Premium capacity with the XMLA endpoint enabled, incremental refresh can be further extended for advanced scenarios. For example, you can use SQL Server Management Studio to view and manage partitions, bootstrap the initial refresh operation, or refresh backdated historical partitions. To learn more, see Advanced incremental refresh with the XMLA endpoint.

Community

Power BI has a vibrant community where MVPs, BI pros, and peers share expertise in discussion groups, videos, blogs and more. When learning about incremental refresh, be sure to check out these additional resources:

- Power BI Community

- Search "Power BI incremental refresh" on Bing

- Search "Incremental refresh for files" on Bing

- Search "Keep existing data using incremental refresh" on Bing

Next steps

Configure incremental refresh for datasets

Advanced incremental refresh with the XMLA endpoint

Troubleshoot incremental refresh

Incremental refresh for dataflows


Source: https://docs.microsoft.com/en-us/power-bi/connect-data/incremental-refresh-overview
